As frequent readers of my blog may already know, I have been using Nix-related tools for quite some time to solve many of my deployment automation problems.

Although I have worked in environments in which Nix and its related sub projects are well-known, when I show some of Nix's use cases to larger groups of DevOps-minded people, a frequent answer that I have been hearing is that it looks very similar to Docker. People also often ask me what advantages Nix has over Docker.

So far, I have not even covered Docker once on my blog, despite its popularity, including very popular sister projects such as docker-compose and Kubernetes.

The main reason why I never wrote anything about Docker is not that I do not know about it or how to use it, but simply that I never had any notable use cases that would lead to something publishable -- most of the problems for which Docker could be a solution, I solved by other means, typically by using a Nix-based solution somewhere in the solution stack.

Docker is a container-based deployment solution. It was not the first (neither in the Linux world, nor in the UNIX world in general), but since its introduction in 2013 it has grown very rapidly in popularity. I believe its popularity can be mainly attributed to its ease of use and its extensible image ecosystem: Docker Hub.

In fact, Docker (and Kubernetes, a container orchestration solution that incorporates Docker) have become so popular that they have set a new standard when it comes to organizing systems and automating deployment -- today, in many environments, I have the feeling that the question is no longer what kind of deployment solution is best for a particular system and organization, but rather: "how do we get it into containers?".

The same thing applies to the "microservices paradigm" that should facilitate modular systems. If I compare the characteristics of microservices with the definition of a "software component" in Clemens Szyperski's Component Software book, then I would argue that they have more in common than they are different.

One of the reasons why I think microservices are considered to be a success (or at least considered moderately more successful by some than older concepts, such as web services and software components) is that they easily map to a container that can be conveniently managed with Docker. For some people, a microservice and a Docker container are pretty much the same thing.

Modular software systems have all kinds of advantages, but their biggest disadvantage is that the deployment of a system becomes more complicated as the number of components and dependencies grows. With Docker containers this problem can be (somewhat) addressed in a convenient way.

In this blog post, I will provide my view on Nix and Docker -- I will elaborate on some of their key concepts, explain in what ways they are different and similar, and show some use cases in which both solutions can be combined to achieve interesting results.

Application domains

Nix and Docker are both deployment solutions for slightly different, but also somewhat overlapping, application domains.

The Nix package manager (on the recently revised homepage) advertises itself as follows:

Nix is a powerful package manager for Linux and other Unix systems that makes package management reliable and reproducible. Share your development and build environments across different machines.

whereas Docker advertises itself as follows (in the getting started guide):

Docker is an open platform for developing, shipping, and running applications.

To summarize my interpretations of the descriptions:

- Nix's chief responsibility is, as its description implies, package management: it provides a collection of software tools that automates the process of installing, upgrading, configuring, and removing computer programs for a computer's operating system in a consistent manner.

There are two properties that set Nix apart from most other package management solutions. First, Nix is also a source-based package manager -- it can be used as a tool to construct packages from source code and their dependencies, by invoking build scripts in "pure build environments".

Moreover, it borrows concepts from purely functional programming languages to make deployments reproducible, reliable and efficient.

- Docker's chief responsibility is much broader than package management -- Docker facilitates full process/service life-cycle management. Package management can be considered to be a sub problem of this domain, as I will explain later in this blog post.

Although both solutions map to slightly different domains, there is one prominent objective that both solutions have in common. They both facilitate reproducible deployment.

With Nix, the goal is that if you build a package from source code and a set of dependencies, and perform the same build with the same inputs on a different machine, the build results should be (nearly) bit-identical.

With Docker, the objective is to facilitate reproducible environments for running applications -- when running an application container on one machine that provides Docker, and running the same application container on another machine, they both should work in an identical way.

Although both solutions facilitate reproducible deployments, their reproducibility properties are based on different kinds of concepts. I will explain more about them in the next sections.

Nix concepts

As explained earlier, Nix is a source-based package manager that borrows concepts from purely functional programming languages. Packages are built from build recipes called Nix expressions, such as:

with import <nixpkgs> {};

stdenv.mkDerivation {
  name = "file-5.38";

  src = fetchurl {
    url = "ftp://ftp.astron.com/pub/file/file-5.38.tar.gz";
    sha256 = "0d7s376b4xqymnrsjxi3nsv3f5v89pzfspzml2pcajdk5by2yg2r";
  };

  buildInputs = [ zlib ];

  meta = {
    homepage = "https://darwinsys.com/file";
    description = "A program that shows the type of files";
  };
}

The above Nix expression invokes the stdenv.mkDerivation function, which creates a build environment in which we build the file package from source code:

- The name parameter provides the package name.

- The src parameter invokes the fetchurl function that specifies where to download the source tarball from.

- buildInputs refers to the build-time dependencies that the package needs. The file package only uses one dependency: zlib that provides deflate compression support.

The buildInputs parameter is used to automatically configure the build environment in such a way that zlib can be found as a library dependency by the build script.

- The meta parameter specifies the package's metadata. Metadata is used by Nix to provide information about the package, but it is not used by the build script.

The Nix expression does not specify any build instructions -- if no build instructions are provided, the stdenv.mkDerivation function will execute the standard GNU Autotools build procedure: ./configure; make; make install.

Nix combines several concepts to make builds more reliable and reproducible.

Foremost, packages managed by Nix are stored in a so-called Nix store (/nix/store) in which every package build resides in its own directory.

When we build the above Nix expression with the following command:

$ nix-build file.nix

then we may get the following Nix store path as output:

/nix/store/6rcg0zgqyn2v1ypd46hlvngaf5lgqk9g-file-5.38

Each entry in the Nix store has a SHA256 hash prefix (e.g. 6rcg0zgqyn2v1ypd46hlvngaf5lgqk9g) that is derived from all build inputs used to build a package.

If we were to build file, for example, with a different build script or a different version of zlib, then the resulting Nix store prefix would be different. As a result, we can safely store multiple versions and variants of the same package next to each other, because they will never share the same name.
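
To make the idea of an input-derived prefix more concrete, the following shell sketch hashes a list of build inputs and keeps the first 32 characters of the digest. It is only a toy model: the store_prefix function and the input strings are made up for illustration, and Nix's actual algorithm hashes a complete derivation and uses its own base-32 notation rather than truncated hexadecimal output.

```shell
#!/bin/sh
# Toy model only: store_prefix is a hypothetical helper, not part of Nix.
# It hashes the names of all build inputs and keeps the first 32 hex digits.
store_prefix() {
    printf '%s\n' "$@" | sha256sum | cut -c1-32
}

# Same source and build script, but two different zlib versions.
with_old_zlib=$(store_prefix "file-5.38.tar.gz" "zlib-1.2.11" "default-builder")
with_new_zlib=$(store_prefix "file-5.38.tar.gz" "zlib-1.2.12" "default-builder")

echo "/nix/store/$with_old_zlib-file-5.38"
echo "/nix/store/$with_new_zlib-file-5.38"
```

Running the sketch prints two paths that end in the same package name but start with different prefixes, which is why both variants could coexist in one store.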

Because each package resides in its own directory in the Nix store, rather than global directories that are commonly used on conventional Linux systems, such as /bin and /lib, we get stricter purity guarantees -- dependencies can typically not be found if they have not been specified in any of the search environment variables (e.g. PATH) or provided as build parameters.

In conventional Linux systems, package builds might still accidentally succeed if they unknowingly use an undeclared dependency. When deploying such a package to another system that does not have this undeclared dependency installed, the package might not work properly or not at all.

In simple single-user Nix installations, builds typically get executed in an environment in which most environment variables (including search path environment variables, such as PATH) are cleared or set to dummy values.

Build abstraction functions (such as stdenv.mkDerivation) will populate the search path environment variables (e.g. PATH, CLASSPATH, PYTHONPATH etc.) and configure build parameters to ensure that the dependencies in the Nix store can be found.
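
The effect of a cleared environment with an explicitly populated search path can be approximated in plain shell. The sketch below is not how Nix implements it; pure_run and UNDECLARED_SETTING are hypothetical names, and the example only shows that a variable from the calling shell does not leak into the command while the explicitly provided PATH does.

```shell
#!/bin/sh
# pure_run is a hypothetical helper: it runs a command with an empty
# environment, except for an explicitly provided PATH.
pure_run() {
    search_path=$1
    shift
    env -i PATH="$search_path" "$@"
}

# A variable exported by the calling shell does not leak into the command.
export UNDECLARED_SETTING=1
pure_run /usr/bin:/bin sh -c 'echo "UNDECLARED_SETTING is ${UNDECLARED_SETTING:-not set}"'
```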

Builds are only allowed to write in the build directory or designated output folders in the Nix store.

When a build has completed successfully, its results are made immutable (by removing their write permission bits in the Nix store) and their timestamps are reset to 1 second after the epoch (to improve build determinism).
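
The two post-build steps can be imitated on an ordinary directory. The following is a minimal sketch, assuming GNU touch, find and stat; seal_output is a hypothetical helper and not something Nix provides under that name.

```shell
#!/bin/sh
# seal_output is a hypothetical helper that mimics the two post-build
# steps described above. It relies on GNU touch and GNU stat.
seal_output() {
    out=$1
    # Set every modification time to 1 second after the epoch.
    find "$out" -exec touch -h -d @1 {} +
    # Remove the write permission bits for everybody.
    chmod -R a-w "$out"
}

# Demonstration on a throwaway directory.
demo=$(mktemp -d)
mkdir -p "$demo/bin"
echo 'echo hello' > "$demo/bin/tool"
seal_output "$demo"

# Show permissions, modification time (seconds since epoch) and name.
stat -c '%A %Y %n' "$demo/bin/tool"
```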

Storing packages in isolation and providing an environment with cleared environment variables is obviously not a guarantee that builds will be pure. For example, build scripts may still have hard-coded absolute paths to executables on the host system, such as /bin/install, and a C compiler may still implicitly search for headers in /usr/include.

To alleviate the problem with hard-coded global directory references, some common build utilities deployed by Nix, such as GCC, have been patched to ignore global directories, such as /usr/include.

When using Nix in multi-user mode, extra precautions have been taken to ensure build purity:

- Each build will run as an unprivileged user that does not have write access to any directory but its own build directory and the designated output Nix store paths.

- On Linux, optionally a build can run in a chroot environment that completely disables access to all global directories in the build process. In addition, all Nix store paths of all dependencies will be bind mounted, preventing the build process from accessing undeclared dependencies in the Nix store (chances are slim that you will encounter such a build, but still...)

- On Linux kernels that support namespaces, the Nix build environment will use them to improve build purity.

The network namespace helps the Nix builder to prevent a build process from accessing the network -- when a build process downloads an undeclared dependency from a remote location, we cannot be sure that we get a predictable result.

In Nix, only builds that are so-called fixed output derivations (whose output hashes need to be known in advance) are allowed to download files from remote locations, because their output results can be verified.

(As a sidenote: namespaces are also intensively used by Docker containers, as I will explain in the next section.)

- On macOS, builds can optionally be executed in an app sandbox that can also be used to restrict access to various kinds of shared resources, such as network access.

Besides isolation, using hash code prefixes has another advantage. Because every build with the same hash code is (nearly) bit-identical, it also provides a nice optimization feature.

When we evaluate a Nix expression and the resulting hash code is identical to a valid Nix store path, then we do not have to build the package again -- because it is bit identical, we can simply return the Nix store path of the package that is already in the Nix store.
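
The optimization boils down to "only build when the output path does not exist yet". A minimal shell sketch of that decision, with a hypothetical build_once helper and made-up path prefixes (Nix additionally checks that the path is registered as valid, which is not modelled here):

```shell
#!/bin/sh
# build_once is a hypothetical helper: it only runs the build command when
# the target path does not exist yet, and otherwise reuses the old result.
build_once() {
    target=$1
    shift
    if [ -e "$target" ]; then
        echo "reusing $target"
    else
        echo "building $target"
        # The build command receives the target path as its last argument.
        "$@" "$target"
    fi
}

store=$(mktemp -d)
out="$store/0123abcd-file-5.38"   # made-up prefix for illustration

build_once "$out" mkdir   # first call: the path is missing, so it is built
build_once "$out" mkdir   # second call: the existing path is reused
```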

This property is also used by Nix to facilitate transparent binary package deployments. If we want to build a package with a certain hash prefix, and we know that another machine or binary cache already has this package in its Nix store, then we can download a binary substitute.

Another interesting benefit of using hash codes is that we can also identify the runtime dependencies that a package needs -- if a Nix store path contains references to other Nix store paths, then we know that these are runtime dependencies of the corresponding package.

Scanning for Nix store paths may sound scary, but there is a very slim chance that a hash code string represents something else. In practice, it works really well.
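
The scanning idea itself is simple enough to sketch with grep. The helper below is hypothetical and not a Nix command; the pattern is an approximation of what a store path looks like (a 32-character prefix followed by a name), and the demonstration file is a stand-in for a real binary.

```shell
#!/bin/sh
# find_references is a hypothetical helper, not a Nix command. It prints
# every distinct Nix store path that occurs in the files below a directory.
find_references() {
    # -r: recurse, -h: no file names, -a: treat binaries as text,
    # -o: print only the matching part, -E: extended regular expressions.
    grep -rhaoE '/nix/store/[0-9a-z]{32}-[A-Za-z0-9._+?=-]+' "$1" | sort -u
}

# Demonstration with a fake package that embeds two store paths.
pkg=$(mktemp -d)
printf 'RPATH=%s:%s\n' \
    /nix/store/5x6l9xm5dp6v113dpfv673qvhwjyb7p5-zlib-1.2.11/lib \
    /nix/store/bqbg6hb2jsl3kvf6jgmgfdqy06fpjrrn-glibc-2.30/lib > "$pkg/file"

find_references "$pkg"
```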

For example, the following shell command shows all the runtime dependencies of the file package:

$ nix-store -qR /nix/store/6rcg0zgqyn2v1ypd46hlvngaf5lgqk9g-file-5.38

/nix/store/y8n2b9nwjrgfx3kvi3vywvfib2cw5xa6-libunistring-0.9.10

/nix/store/fhg84pzckx2igmcsvg92x1wpvl1dmybf-libidn2-2.3.0

/nix/store/bqbg6hb2jsl3kvf6jgmgfdqy06fpjrrn-glibc-2.30

/nix/store/5x6l9xm5dp6v113dpfv673qvhwjyb7p5-zlib-1.2.11

/nix/store/6rcg0zgqyn2v1ypd46hlvngaf5lgqk9g-file-5.38

If we query the dependencies of another package that is built from the same Nix packages set, such as cpio:

$ nix-store -qR /nix/store/bzm0mszhvbr6hp4gmar4czsn52hz07q1-cpio-2.13

/nix/store/y8n2b9nwjrgfx3kvi3vywvfib2cw5xa6-libunistring-0.9.10

/nix/store/fhg84pzckx2igmcsvg92x1wpvl1dmybf-libidn2-2.3.0

/nix/store/bqbg6hb2jsl3kvf6jgmgfdqy06fpjrrn-glibc-2.30

/nix/store/bzm0mszhvbr6hp4gmar4czsn52hz07q1-cpio-2.13

When looking at the outputs above, you may notice that both file and cpio share the same kinds of dependencies (e.g. libidn2, libunistring and glibc), with the same hash code prefixes. Because they are the same Nix store paths, they are shared on disk (and in RAM, because the operating system caches the same files in memory), leading to more efficient disk and RAM usage.

The fact that we can detect references to Nix store paths is because packages in the Nix package repository use an unorthodox form of static linking.

For example, ELF executables built with Nix have the store paths of their library dependencies in their RPATH header values (the ld command in Nixpkgs has been wrapped to transparently add library paths to a binary's RPATH).

Python programs (and other programs written in interpreted languages) typically use wrapper scripts that set the PYTHONPATH (or equivalent) environment variables to contain Nix store paths providing the dependencies.

Docker concepts

The Docker overview page states the following about what Docker can do:

When you use Docker, you are creating and using images, containers, networks, volumes, plugins, and other objects.

Although you can create many kinds of objects with Docker, the two most important objects are the following:

- Images. The overview page states: "An image is a read-only template with instructions for creating a Docker container."

More precisely, images are created from build recipes called Dockerfiles. They produce self-contained root file systems containing all necessary files to run a program, such as binaries, libraries, configuration files etc. The resulting image itself is immutable (read only) and cannot change after it has been built.

- Containers. The overview gives the following description: "A container is a runnable instance of an image".

More specifically, this means that the life-cycle (whether a container is in a started or stopped state) is bound to the life-cycle of a root process, that runs in a (somewhat) isolated environment using the content of a Docker image as its root file system.

Besides the object types explained above, there are many more kinds of objects, such as volumes (that can mount a directory from the host file system to a path in the container), and port forwardings from the host system to a container. For more information about these remaining objects, consult the Docker documentation.

Docker combines several concepts to facilitate reproducible and reliable container deployment. To be able to isolate containers from each other, it uses several kinds of Linux namespaces:

- The mount namespace: this is IMO the most important namespace. After setting up a private mount namespace, every subsequent mount that we make will be visible in the container, but not to other containers/processes that are in a different mount namespace.

A private mount namespace is used to mount a new root file system (the contents of the Docker image) with all essential system software and other artifacts to run an application, that is different from the host system's root file system.

- The Process ID (PID) namespace facilitates process isolation. A process/container with a private PID namespace will not be able to see or control the host system's processes (the opposite is actually possible).

- The network namespace separates network interfaces from the host system. In a private network namespace, a container has one or more private network interfaces with their own IP addresses, port assignments and firewall settings.

As a result, a service such as the Apache HTTP server in a Docker container can bind to port 80 without conflicting with another HTTP server that binds to the same port on the host system or in another container instance.

- The Inter-Process Communication (IPC) namespace isolates the ability of processes to communicate with each other via the SHM family of functions, which establish a range of shared memory between processes.

- The UTS namespace isolates kernel and version identifiers.

Another important concept that containers use is cgroups, which can be used to limit the amount of system resources that containers can use, such as the amount of RAM.

Finally, to optimize/reduce storage overhead, Docker uses layers and a union filesystem (there are a variety of file system options for this) to combine these layers by "stacking" them on top of each other.

A running container basically mounts an image's read-only layers on top of each other, and keeps the final layer writable so that processes in the container can create and modify files on the system.

Whenever you construct an image from a Dockerfile, each modification operation generates a new layer. Each layer is immutable (it will never change after it has been created) and is uniquely identifiable with a hash code, similar to Nix store paths.

For example, we can build an image with the following Dockerfile that deploys and runs the Apache HTTP server on a Debian Buster Linux distribution:

FROM debian:buster

RUN apt-get update

RUN apt-get install -y apache2

ADD index.html /var/www/html

CMD ["apachectl", "-D", "FOREGROUND"]

EXPOSE 80/tcp

The above Dockerfile executes the following steps:

- It takes the debian:buster image from Docker Hub as a base image.

- It updates the Debian package database (apt-get update) and installs the Apache HTTPD server package from the Debian package repository.

- It uploads an example page (index.html) to the document root folder.

- It executes the apachectl -D FOREGROUND command-line instruction to start the Apache HTTP server in foreground mode. The container's life-cycle is bound to the life-cycle of this foreground process.

- It informs Docker that the container listens on TCP port 80. Connecting to port 80 makes it possible for a user to retrieve the example index.html page.

With the following command we can build the image:

$ docker build . -t debian-apache

Resulting in the following layers:

$ docker history debian-apache:latest

IMAGE CREATED CREATED BY SIZE COMMENT

a72c04bd48d6 About an hour ago /bin/sh -c #(nop) EXPOSE 80/tcp 0B

325875da0f6d About an hour ago /bin/sh -c #(nop) CMD ["apachectl""-D""FO… 0B

35d9a1dca334 About an hour ago /bin/sh -c #(nop) ADD file:18aed37573327bee1… 129B

59ee7771f1bc About an hour ago /bin/sh -c apt-get install -y apache2 112MB

c355fe9a587f 2 hours ago /bin/sh -c apt-get update 17.4MB

ae8514941ea4 33 hours ago /bin/sh -c #(nop) CMD ["bash"] 0B

<missing> 33 hours ago /bin/sh -c #(nop) ADD file:89dfd7d3ed77fd5e0… 114MB

As may be observed, the base Debian Buster image and every change made in the Dockerfile results in a new layer with a new hash code, as shown in the IMAGE column.

Layers and Nix store paths share the similarity that they are immutable and they can both be identified with hash codes.

They are also different -- first, a Nix store path is the result of building a package or a static artifact, whereas a layer is the result of making a filesystem modification. Second, for a Nix store path, the hash code is derived from all inputs, whereas the hash code of a layer is derived from the output: its contents.

Furthermore, Nix store paths are always isolated because they reside in a unique directory (enforced by the hash prefixes), whereas a layer might have files that overlap with files in other layers. In Docker, when a conflict is encountered the files in the layer that gets added on top of it take precedence.
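
The precedence rule for overlapping files can be imitated by copying directories on top of each other in order. This is only a toy approximation with a hypothetical merge_layers helper and made-up file names; a real union filesystem overlays the layers at mount time instead of copying them.

```shell
#!/bin/sh
# merge_layers is a hypothetical helper. A real union filesystem overlays
# layers at mount time; copying them in order is only a toy approximation.
merge_layers() {
    target=$1
    shift
    mkdir -p "$target"
    for layer in "$@"; do
        # Files from later layers overwrite files from earlier layers.
        cp -a "$layer/." "$target/"
    done
}

work=$(mktemp -d)
mkdir -p "$work/base/etc" "$work/top/etc"
echo "from base" > "$work/base/etc/motd"
echo "only in base" > "$work/base/etc/hostname"
echo "from top" > "$work/top/etc/motd"

merge_layers "$work/merged" "$work/base" "$work/top"

# The conflicting file comes from the layer that was added last.
cat "$work/merged/etc/motd"
```

A Nix store path never needs such a rule, because its unique directory name rules out overlapping files in the first place.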

We can construct a second image using the same Debian Linux distribution image that runs Nginx with the following Dockerfile:

FROM debian:buster

RUN apt-get update

RUN apt-get install -y nginx

ADD nginx.conf /etc

ADD index.html /var/www

CMD ["nginx", "-g", "daemon off;", "-c", "/etc/nginx.conf"]

EXPOSE 80/tcp

The above Dockerfile looks similar to the previous one, except that we install the Nginx package from the Debian package repository and we use a different command-line instruction to start Nginx in foreground mode.

When building the image, its storage will be optimized -- both images share the same base layer (the Debian Buster Linux base distribution):

$ docker history debian-nginx:latest

IMAGE CREATED CREATED BY SIZE COMMENT

b7ae6f38ae77 2 hours ago /bin/sh -c #(nop) EXPOSE 80/tcp 0B

17027888ce23 2 hours ago /bin/sh -c #(nop) CMD ["nginx""-g""daemon… 0B

41a50a3fa73c 2 hours ago /bin/sh -c #(nop) ADD file:18aed37573327bee1… 129B

0f5b2fdcb207 2 hours ago /bin/sh -c #(nop) ADD file:f18afd18cfe2728b3… 189B

e49bbb46138b 2 hours ago /bin/sh -c apt-get install -y nginx 64.2MB

c355fe9a587f 2 hours ago /bin/sh -c apt-get update 17.4MB

ae8514941ea4 33 hours ago /bin/sh -c #(nop) CMD ["bash"] 0B

<missing> 33 hours ago /bin/sh -c #(nop) ADD file:89dfd7d3ed77fd5e0… 114MB

If you compare the above output with the previous docker history output, then you will notice that the bottom layer (last row) refers to the same layer using the same hash code behind the ADD file: statement in the CREATED BY column.

This ability to share the base distribution prevents us from storing another 114MB Debian Buster image, saving us storage and RAM.

Some common misconceptions

What I have noticed is that quite a few people compare containers to virtual machines (and even give containers that name, incorrectly suggesting that they are the same thing!).

A container is not a virtual machine, because it does not emulate or virtualize hardware -- virtual machines have a virtual CPU, virtual memory, virtual disk etc. that have similar capabilities and limitations as real hardware.

Furthermore, containers do not run a full operating system -- they run processes managed by the host system's Linux kernel. As a result, Docker containers will only deploy software that runs on Linux, and not software that was built for other operating systems.

(As a sidenote: Docker can also be used on Windows and macOS -- on these non-Linux platforms, a virtualized Linux system is used for hosting the containers, but the containers themselves are not separated by using virtualization).

Containers cannot even be considered "light weight virtual machines".

The means to isolate containers from each other only apply to a limited number of potentially shared resources. For example, a resource that could not be unshared is the system's clock, although this may change in the near future, because in March 2020 a time namespace was added to the newest Linux kernel version. I believe this namespace is not yet offered as a generally available feature in Docker.

Moreover, namespaces, which normally provide separation/isolation between containers, are objects, and these objects can also be shared among multiple container instances (this is an uncommon use case, because by default every container has its own private namespaces).

For example, it is also possible for two containers to share the same IPC namespace -- then processes in both containers will be able to communicate with each other with a shared-memory IPC mechanism, but they cannot do any IPC with processes on the host system or containers not sharing the same namespace.

Finally, certain system resources are not constrained by default unlike a virtual machine -- for example, a container is allowed to consume all the RAM of the host machine unless a RAM restriction has been configured. An unrestricted container could potentially affect the machine's stability as a whole and other containers running on the same machine.

A comparison of use cases

As mentioned in the introduction, when I show people Nix, then I often get a remark that it looks very similar to Docker.

In this section, I will compare some of their common use cases.

Managing services

In addition to building a Docker image, I believe the most common use case for Docker is to manage services, such as custom REST API services (that are self-contained processes with an embedded web server), web servers or database management systems.

For example, after building an Nginx Docker image (as shown in the section about Docker concepts), we can also launch a container instance using the previously constructed image to serve our example HTML page:

$ docker run -p 8080:80 --name nginx-container -it debian-nginx

The above command creates a new container instance using our Nginx image as a root file system and then starts the container in interactive mode -- the command's execution will block and display the output of the Nginx process on the terminal.

If we replace the -it parameters with -d, then the container will run in the background.

The -p parameter configures a port forwarding from the host system to the container: traffic to the host system's port 8080 gets forwarded to port 80 in the container, on which the Nginx server listens.

We should be able to see the example HTML page, by opening the following URL in a web browser:

http://localhost:8080

After stopping the container, its state will be retained. We can remove the container permanently, by running:

$ docker rm nginx-container

The Nix package manager has no equivalent use case for managing running processes, because its purpose is package management and not process/service life-cycle management.

However, some projects based on Nix do provide this. For example, NixOS, a Linux distribution built around the Nix package manager that uses a single declarative configuration file to capture a machine's configuration, generates systemd unit files to manage services' life-cycles.

The Nix package manager can also be used on other operating systems, such as conventional Linux distributions, macOS and other UNIX-like systems. There is no universal solution that allows you to complement Nix with service management support on all platforms that Nix supports.

Experimenting with packages

Another common use case is using Docker to experiment with packages that should not remain permanently installed on a system.

One way of doing this is by directly pulling a Linux distribution image (such as Debian Buster):

$ docker pull debian:buster

and then starting a container in an interactive shell session, in which we install the packages that we want to experiment with:

$ docker run --name myexperiment -it debian:buster /bin/sh

# apt-get update

# apt-get install -y file

# file --version

file-5.22

magic file from /etc/magic:/usr/share/misc/magic

The above example suffices to experiment with the file package, but its deployment is not guaranteed to be reproducible.

For example, the result of running my apt-get instructions shown above is file version 5.22. If I were to run the same instructions a week later, then I might get a different version (e.g. 5.23).

The Docker way of making such a deployment scenario reproducible is by installing the packages in a Dockerfile as part of the container's image construction process:

FROM debian:buster

RUN apt-get update

RUN apt-get install -y file

We can build the container image with our file package as follows:

$ docker build . -t file-experiment

and then deploy a container that uses that image:

$ docker run --name myexperiment -it file-experiment /bin/sh

As long as we deploy a container with the same image, we will always have the same version of the file executable:

$ docker run --name myexperiment -it file-experiment /bin/sh

# file --version

file-5.22

magic file from /etc/magic:/usr/share/misc/magic

With Nix, generating reproducible development environments with packages is a first-class feature.

For example, to launch a shell session providing the file package from the Nixpkgs collection, we can simply run:

$ nix-shell -p file

$ file --version

file-5.39

magic file from /nix/store/j4jj3slm15940mpmympb0z99a2ghg49q-file-5.39/share/misc/magic

As long as the Nix expression sources remain the same (e.g. the Nix channel is not updated, or NIX_PATH is hardwired to a certain Git revision of Nixpkgs), the deployment of the development environment is reproducible -- we should always get the same file package with the same Nix store path.

Building development projects/arbitrary packages

As shown in the section about Nix's concepts, one of Nix's key features is to generate build environments for building packages and other software projects. I have shown that with a simple Nix expression consisting of only a few lines of code, we can build the file package from source code and its build dependencies in such a dedicated build environment.

In Docker, only building images is a first-class concept. However, building arbitrary software projects and packages is also something you can do by using Docker containers in a specific way.

For example, we can create a bash script that builds the same example package (file) shown in the section that explains Nix's concepts:

#!/bin/bash -e

mkdir -p /build

cd /build

wget ftp://ftp.astron.com/pub/file/file-5.38.tar.gz

tar xfv file-5.38.tar.gz

cd file-5.38

./configure --prefix=/opt/file

make

make install

tar cfvz /out/file-5.38-binaries.tar.gz /opt/file

Compared to its Nix expression counterpart, the build script above does not use any abstractions -- as a consequence, we have to explicitly write out all the steps that are required to build the package:

- Create a dedicated build directory.

- Download the source tarball from the FTP server

- Unpack the tarball

- Execute the standard GNU Autotools build procedure: ./configure; make; make install and install the binaries in an isolated folder (/opt/file).

- Create a binary tarball from the /opt/file folder and store it in the /out directory (that is a volume shared between the container and the host system).

To create a container that runs the build script and to provide its dependencies in a reproducible way, we need to construct an image from the following Dockerfile:

FROM debian:buster

RUN apt-get update

RUN apt-get install -y wget gcc make libz-dev

ADD ./build.sh /

CMD /build.sh

The above Dockerfile builds an image using the Debian Buster Linux distribution, installs all mandatory build utilities (wget, gcc, and make) and library dependencies (libz-dev), and executes the build script shown above.

With the following command, we can build the image:

$ docker build . -t buildenv

and with the following command, we can create and launch the container that executes the build script (and automatically discard it as soon as it finishes its task):

$ docker run -v $(pwd)/out:/out --rm -t buildenv

To make sure that we can keep our resulting binary tarball after the container gets discarded, we have created a shared volume that maps the out directory in our current working directory onto the /out directory in the container.

When the build script finishes, the output directory should contain our generated binary tarball:

$ ls out/

file-5.38-binaries.tar.gz

Although Nix and Docker can both provide reproducible environments for building packages (in the case of Docker, we need to make sure that all dependencies are provided by the Docker image), builds performed in a Docker container are not guaranteed to be pure, because Docker does not take the same precautions that Nix takes:

- In the build script, we download the source tarball without checking its integrity. This might cause an impurity, because the tarball on the remote server could change (this could happen for non-malicious as well as malicious reasons).

- While running the build, we have unrestricted network access. The build script might unknowingly download all kinds of undeclared/unknown dependencies from external sites whose results are not deterministic.

- We do not reset any timestamps -- as a result, when performing the same build twice in a row, the second result might be slightly different because of the timestamps integrated in the build product.

Coping with these impurities in a Docker workflow is the responsibility of the build script implementer. With Nix, most of it is transparently handled for you.
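As an illustration of what coping with the first impurity could look like, the following is a minimal sketch of an integrity check that a build script could run right after downloading a tarball. The function name verify_sha256 is my own invention, and the demonstration uses a locally generated stand-in file rather than the real file tarball, because the expected digest has to be pinned from a source you trust:

```shell
#!/bin/bash
# Hypothetical helper (not part of the original build script): succeed only
# if the SHA-256 digest of a file matches the digest we expect.
verify_sha256() {
    local file="$1" expected="$2" actual
    actual="$(sha256sum "$file" | cut -d ' ' -f 1)"
    [ "$actual" = "$expected" ]
}

# Demonstration with a locally generated stand-in for a source tarball.
demo="$(mktemp)"
printf 'hello\n' > "$demo"
good="$(sha256sum "$demo" | cut -d ' ' -f 1)"

verify_sha256 "$demo" "$good" && echo "checksum ok"
verify_sha256 "$demo" "0000" || echo "checksum mismatch"
```

In a real build script, the call would sit between the wget and tar steps, and the script would abort on a mismatch. This only addresses the first impurity in the list; network access and timestamps would still need separate measures.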

Moreover, the build script implementer is also responsible for retrieving the build artifact and storing it somewhere, e.g. in a directory outside the container or by uploading it to a remote artifact repository.

In Nix, the result of a build process is automatically stored in isolation in the Nix store. We can also quite easily turn a Nix store into a binary cache and let other Nix consumers download from it, e.g. by installing nix-serve, Hydra (the Nix-based continuous integration service), or cachix, or by manually generating a static binary cache.

Beyond the ability to execute builds, Nix has another great advantage for building packages from source code. On Linux systems, the Nixpkgs collection is entirely bootstrapped, except for the bootstrap binaries -- this provides us almost full traceability of all dependencies and transitive dependencies used at build-time.

With Docker you typically do not have such insights -- images get constructed from binaries obtained from arbitrary locations (e.g. binary packages that originate from Linux distributions' package repositories). As a result, it is impossible to get any insights on how these package dependencies were constructed from source code.

For most people, knowing exactly from which sources a package has been built is not considered important, but it can still be useful for more specialized use cases, for example, to determine whether your system is constructed from trustable/audited sources and whether you have violated the license of a third-party library.

Combined use cases

As explained earlier in this blog post, Nix and Docker are deployment solutions for slightly different application domains.

There are quite a few solutions developed by the Nix community that can combine Nix and Docker in interesting ways.

In this section, I will show some of them.

Experimenting with the Nix package manager in a Docker container

Since Docker is such a common solution to provide environments in which users can experiment with packages, the Nix community also provides a Nix Docker image that allows you to conveniently experiment with the Nix package manager in a Docker container.

We can pull this image as follows:

$ docker pull nixos/nix

Then launch a container interactively:

$ docker run -it nixos/nix

And finally, pull the package specifications from the Nix channel and install any Nix package that we want in the container:

$ nix-channel --add https://nixos.org/channels/nixpkgs-unstable nixpkgs

$ nix-channel --update

$ nix-env -f '<nixpkgs>' -iA file

$ file --version

file-5.39

magic file from /nix/store/bx9l7vrcb9izgjgwkjwvryxsdqdd5zba-file-5.39/share/misc/magic

Using the Nix package manager to deliver the required packages to construct an image

In the examples that construct Docker images for Nginx and the Apache HTTP server, I use the Debian Buster Linux distribution as base images in which I add the required packages to run the services from the Debian package repository.

This is a common practice to construct Docker images -- as I have already explained in the section that covers its concepts, package management is a sub problem of the process/service life-cycle management problem, but Docker leaves solving this problem to the Linux distribution's package manager.

Instead of using conventional Linux distributions and their package management solutions, such as Debian, Ubuntu (using apt-get), Fedora (using yum) or Alpine Linux (using apk), it is also possible to use Nix.

The following Dockerfile can be used to create an image that uses Nginx deployed by the Nix package manager:

FROM nixos/nix

RUN nix-channel --add https://nixos.org/channels/nixpkgs-unstable nixpkgs

RUN nix-channel --update

RUN nix-env -f '<nixpkgs>' -iA nginx

RUN mkdir -p /var/log/nginx /var/cache/nginx /var/www

ADD nginx.conf /etc

ADD index.html /var/www

CMD ["nginx", "-g", "daemon off;", "-c", "/etc/nginx.conf"]

EXPOSE 80/tcp

Using Nix to build Docker images

Earlier, I have shown that the Nix package manager can also be used in a Dockerfile to obtain all required packages to run a service.

In addition to building software packages, Nix can also build all kinds of static artifacts, such as disk images, DVD ROM ISO images, and virtual machine configurations.

The Nixpkgs repository also contains an abstraction function to build Docker images that does not require any Docker utilities.

For example, with the following Nix expression, we can build a Docker image that deploys Nginx:

with import <nixpkgs> {};

dockerTools.buildImage {

name = "nginxexp";

tag = "test";

contents = nginx;

runAsRoot = ''

${dockerTools.shadowSetup}

groupadd -r nogroup

useradd -r nobody -g nogroup -d /dev/null

mkdir -p /var/log/nginx /var/cache/nginx /var/www

cp ${./index.html} /var/www/index.html

'';

config = {

Cmd = [ "${nginx}/bin/nginx" "-g" "daemon off;" "-c" ./nginx.conf ];

Expose = {

"80/tcp" = {};

};

};

}

The above expression propagates the following parameters to the dockerTools.buildImage function:

- The name of the image is: nginxexp using the tag: test.

- The contents parameter specifies all Nix packages that should be installed in the Docker image.

- The runAsRoot parameter refers to a script that runs as root user in a QEMU virtual machine. This virtual machine is used to provide the dynamic parts of a Docker image, such as setting up user accounts and configuring the state of the Nginx service.

- The config parameter specifies image configuration properties, such as the command to execute and which TCP ports should be exposed.

Running the following command:

$ nix-build

/nix/store/qx9cpvdxj78d98rwfk6a5z2qsmqvgzvk-docker-image-nginxexp.tar.gz

produces a compressed tarball that contains all files belonging to the Docker image. We can load the image into Docker with the following command:

$ docker load -i \

/nix/store/qx9cpvdxj78d98rwfk6a5z2qsmqvgzvk-docker-image-nginxexp.tar.gz

and then launch a container instance that uses the Nix-generated image:

$ docker run -p 8080:80/tcp -it nginxexp:test

We can then look at the Docker images overview:

$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginxexp test cde8298f025f 50 years ago 61MB

There are two properties that stand out when you compare the Nix generated Docker image to conventional Docker images:

- The first odd property is that the overview says that the image was created 50 years ago. This is explainable: to make Nix builds pure and deterministic, time stamps are typically reset to 1 second after the epoch (January 1st 1970), to ensure that we always get the same bit-identical build result.

- The second property is the size of the image: 61MB is considerably smaller than our Debian-based Docker image.
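To make the first property a bit more tangible, here is a small sketch that gives a scratch file the same kind of time stamp (1 second after the epoch) and reads it back. It only illustrates the time stamp itself, not how Nix resets it, and it assumes GNU coreutils:

```shell
#!/bin/bash
# Illustration only: give a scratch file a modification time of 1 second
# after the epoch and read it back.
# Assumes GNU coreutils (touch -d @N, stat -c, date -d).
scratch="$(mktemp)"
touch -d @1 "$scratch"

mtime="$(stat -c %Y "$scratch")"   # modification time in seconds since the epoch
date -u -d "@$mtime" '+%Y-%m-%d %H:%M:%S UTC'

rm -f "$scratch"
```

This prints 1970-01-01 00:00:01 UTC, which is why Docker reports such an image as roughly 50 years old.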

To give you a comparison: the docker history command-line invocation (shown earlier in this blog post) that displays the layers of which the Debian-based Nginx image consists, shows that the base Linux distribution image consumes 114 MB, the update layer 17.4 MB and the layer that provides the Nginx package is 64.2 MB.
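Adding up these numbers gives a rough idea of the difference. The sketch below assumes that the three quoted layers account for nearly all of the Debian-based image (any small configuration layers are ignored):

```shell
#!/bin/bash
# Layer sizes in MB, as quoted from the docker history output in this post.
base=114
update=17.4
nginx=64.2
# Size of the Nix-generated image in MB, as reported by docker images.
nix_image=61

total="$(awk -v a="$base" -v b="$update" -v c="$nginx" 'BEGIN { printf "%.1f", a + b + c }')"
ratio="$(awk -v t="$total" -v n="$nix_image" 'BEGIN { printf "%.1f", t / n }')"

echo "Debian-based image: ${total} MB"
echo "Nix-generated image: ${nix_image} MB"
echo "The Debian-based image is about ${ratio} times as large"
```

Under that assumption, the Debian-based image adds up to 195.6 MB, about 3.2 times the size of the Nix-generated one.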

Nix-generated images are so small because Nix knows exactly which runtime dependencies are required to run Nginx. As a result, we can restrict the image to only contain Nginx and its required runtime dependencies, leaving all unnecessary software out.

The Debian-based Nginx container is much bigger, because it also contains a base Debian Linux system with all kinds of command-line utilities and libraries, that are not required to run Nginx.

The same limitation also applies to the Nix Docker image shown in the previous sections -- the Nix Docker image was constructed from an Alpine Linux image and contains a small, but fully functional Linux distribution. As a result, it is bigger than the Docker image directly generated from a Nix expression.

Although a Nix-generated Docker image is smaller than most conventional images, one of its disadvantages is that the image consists of only one single layer -- as we have seen in the section about Nix concepts, many services typically share the same runtime dependencies (such as glibc). Because these common dependencies are not in a reusable layer, they cannot be shared.

To optimize reuse, it is also possible to build layered Docker images with Nix:

with import <nixpkgs> {};

dockerTools.buildLayeredImage {

name = "nginxexp";

tag = "test";

contents = nginx;

maxLayers = 100;

extraCommands = ''

mkdir -p var/log/nginx var/cache/nginx var/www

cp ${./index.html} var/www/index.html

'';

config = {

Cmd = [ "${nginx}/bin/nginx" "-g" "daemon off;" "-c" ./nginx.conf ];

Expose = {

"80/tcp" = {};

};

};

}

The above Nix expression is similar to the previous one, but uses dockerTools.buildLayeredImage to construct a layered image.

We can build and load the image as follows:

$ docker load -i $(nix-build layered.nix)

When we retrieve the history of the image, we will see the following:

$ docker history nginxexp:test

IMAGE CREATED CREATED BY SIZE COMMENT

b91799a04b99 50 years ago 1.47kB store paths: ['/nix/store/snxpdsksd4wxcn3niiyck0fry3wzri96-nginxexp-customisation-layer']

<missing> 50 years ago 200B store paths: ['/nix/store/6npz42nl2hhsrs98bq45aqkqsndpwvp1-nginx-root.conf']

<missing> 50 years ago 1.79MB store paths: ['/nix/store/qsq6ni4lxd8i4g9g4dvh3y7v1f43fqsp-nginx-1.18.0']

<missing> 50 years ago 71.3kB store paths: ['/nix/store/n14bjnksgk2phl8n69m4yabmds7f0jj2-source']

<missing> 50 years ago 166kB store paths: ['/nix/store/jsqrk045m09i136mgcfjfai8i05nq14c-source']

<missing> 50 years ago 1.3MB store paths: ['/nix/store/4w2zbpv9ihl36kbpp6w5d1x33gp5ivfh-source']

<missing> 50 years ago 492kB store paths: ['/nix/store/kdrdxhswaqm4dgdqs1vs2l4b4md7djma-pcre-8.44']

<missing> 50 years ago 4.17MB store paths: ['/nix/store/6glpgx3pypxzb09wxdqyagv33rrj03qp-openssl-1.1.1g']

<missing> 50 years ago 385kB store paths: ['/nix/store/7n56vmgraagsl55aarx4qbigdmcvx345-libxslt-1.1.34']

<missing> 50 years ago 324kB store paths: ['/nix/store/1f8z1lc748w8clv1523lma4w31klrdpc-geoip-1.6.12']

<missing> 50 years ago 429kB store paths: ['/nix/store/wnrjhy16qzbhn2qdxqd6yrp76yghhkrg-gd-2.3.0']

<missing> 50 years ago 1.22MB store paths: ['/nix/store/hqd0i3nyb0717kqcm1v80x54ipkp4bv6-libwebp-1.0.3']

<missing> 50 years ago 327kB store paths: ['/nix/store/79nj0nblmb44v15kymha0489sw1l7fa0-fontconfig-2.12.6-lib']

<missing> 50 years ago 1.7MB store paths: ['/nix/store/6m9isbbvj78pjngmh0q5qr5cy5y1kzyw-libxml2-2.9.10']

<missing> 50 years ago 580kB store paths: ['/nix/store/2xmw4nxgfximk8v1rkw74490rfzz2gjp-libtiff-4.1.0']

<missing> 50 years ago 404kB store paths: ['/nix/store/vbxifzrl7i5nvh3h505kyw325da9k47n-giflib-5.2.1']

<missing> 50 years ago 79.8kB store paths: ['/nix/store/jc5bd71qcjshdjgzx9xdfrnc9hsi2qc3-fontconfig-2.12.6']

<missing> 50 years ago 236kB store paths: ['/nix/store/9q5gjvrabnr74vinmjzkkljbpxi8zk5j-expat-2.2.8']

<missing> 50 years ago 482kB store paths: ['/nix/store/0d6vl8gzwqc3bdkgj5qmmn8v67611znm-xz-5.2.5']

<missing> 50 years ago 6.28MB store paths: ['/nix/store/rmn2n2sycqviyccnhg85zangw1qpidx0-gcc-9.3.0-lib']

<missing> 50 years ago 1.98MB store paths: ['/nix/store/fnhsqz8a120qwgyyaiczv3lq4bjim780-freetype-2.10.2']

<missing> 50 years ago 757kB store paths: ['/nix/store/9ifada2prgfg7zm5ba0as6404rz6zy9w-dejavu-fonts-minimal-2.37']

<missing> 50 years ago 1.51MB store paths: ['/nix/store/yj40ch9rhkqwyjn920imxm1zcrvazsn3-libjpeg-turbo-2.0.4']

<missing> 50 years ago 79.8kB store paths: ['/nix/store/1lxskkhsfimhpg4fd7zqnynsmplvwqxz-bzip2-1.0.6.0.1']

<missing> 50 years ago 255kB store paths: ['/nix/store/adldw22awj7n65688smv19mdwvi1crsl-libpng-apng-1.6.37']

<missing> 50 years ago 123kB store paths: ['/nix/store/5x6l9xm5dp6v113dpfv673qvhwjyb7p5-zlib-1.2.11']

<missing> 50 years ago 30.9MB store paths: ['/nix/store/bqbg6hb2jsl3kvf6jgmgfdqy06fpjrrn-glibc-2.30']

<missing> 50 years ago 209kB store paths: ['/nix/store/fhg84pzckx2igmcsvg92x1wpvl1dmybf-libidn2-2.3.0']

<missing> 50 years ago 1.63MB store paths: ['/nix/store/y8n2b9nwjrgfx3kvi3vywvfib2cw5xa6-libunistring-0.9.10']

As you may notice, all Nix store paths are in their own layers. If we also built a layered Docker image for the Apache HTTP service, we would end up using less disk space (because common dependencies such as glibc can be reused), and less RAM (because these common dependencies can be shared in RAM).

Mapping Nix store paths onto layers obviously has limitations -- there is a maximum number of layers that Docker can use (in the Nix expression, I have imposed a limit of 100 layers, recent versions of Docker support a somewhat higher number).

Complex systems packaged with Nix typically have many more dependencies than the number of layers that Docker can mount. To cope with this limitation, the dockerTools.buildLayeredImage abstraction function tries to merge infrequently used dependencies into shared layers. More information about this process can be found in Graham Christensen's blog post.
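To give an impression of what such a merging strategy could look like, here is a deliberately simplified toy model. It is not the algorithm that dockerTools actually implements: the function name assign_layers is hypothetical, and I simply assume that the paths are passed in order from most frequently to least frequently shared:

```shell
#!/bin/bash
# Toy model only (hypothetical function, not the dockerTools implementation):
# assign paths to at most $1 layers. The paths are assumed to be ordered from
# most to least frequently shared. Each of the first (max - 1) paths gets a
# layer of its own; all remaining paths are merged into the last layer.
assign_layers() {
    local max="$1"; shift
    local i=0 path
    for path in "$@"; do
        i=$((i + 1))
        if [ "$i" -lt "$max" ]; then
            echo "layer $i: $path"
        else
            echo "layer $max: $path"
        fi
    done
}

assign_layers 3 glibc openssl zlib pcre nginx
```

With a limit of three layers, glibc and openssl each get their own layer, whereas zlib, pcre and nginx end up together in the third layer.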

Besides the use cases shown in the examples above, there is much more you can do with the dockerTools functions in Nixpkgs -- you can also pull images from Docker Hub (with the dockerTools.pullImage function) and use the dockerTools.buildImage function to use existing Docker images as a basis to create hybrids combining conventional Linux software with Nix packages.

Conclusion

In this blog post, I have elaborated on using Nix and Docker as deployment solutions.

What they both have in common is that they facilitate reliable and reproducible deployment.

They can be used for a variety of use cases in two different domains (package management and process/service management). Some of these use cases are common to both Nix and Docker.

Nix and Docker can also be combined in several interesting ways -- Nix can be used as a package manager to deliver package dependencies in the construction process of an image, and Nix can also be used directly to build images, as a replacement for Dockerfiles.

This table summarizes the conceptual differences between Nix and Docker covered in this blog post:

| | Nix | Docker |
|---|---|---|
| Application domain | Package management | Process/service management |
| Storage units | Package build results | File system changes |
| Storage model | Isolated Nix store paths | Layers + union file system |
| Component addressing | Hashes computed from inputs | Hashes computed from a layer's contents |
| Service/process management | Unsupported | First-class feature |
| Package management | First-class support | Delegated responsibility to a distro's package manager |
| Development environments | nix-shell | Create image with dependencies + run shell session in container |
| Build management (images) | dockerTools.buildImage {}, dockerTools.buildLayeredImage {} | Dockerfile |
| Build management (packages) | First-class function support | Implementer's responsibility, can be simulated |
| Build environment purity | Many precautions taken | Only images provide some reproducibility, implementer's responsibility |
| Full source traceability | Yes (on Linux) | No |
| OS support | Many UNIX-like systems | Linux (real system or virtualized) |

I believe the last item in the table deserves a bit of clarification -- Nix works on other operating systems than Linux, e.g. macOS, and can also deploy binaries for those platforms.

Docker can be used on Windows and macOS, but it still deploys Linux software -- on Windows and macOS containers are deployed to a virtualized Linux environment. Docker containers can only work on Linux, because they heavily rely on Linux-specific concepts: namespaces and cgroups.

Aside from the functional parts, Nix and Docker also have some fundamental non-functional differences. One of them is usability.

Although I am a long-time Nix user (since 2007), I have to acknowledge that Docker is very popular because it is well-known and provides quite an optimized user experience. It does not deviate much from the way traditional Linux systems are managed -- this probably explains why so many users incorrectly call containers "virtual machines", because they manifest themselves as units that provide almost fully functional Linux distributions.

In my own experience, it is typically more challenging to convince a new audience to adopt Nix -- getting an audience used to the fact that a package build can be modeled as a pure function invocation (in which the function parameters are a package's build inputs) and that a specialized Nix store is used to store all static artifacts, is sometimes difficult.

Both Nix and Docker support reuse: the former by means of using identical Nix store paths and the latter by using identical layers. For both solutions, these objects can be identified with hash codes.
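The difference between the two addressing schemes can be sketched with a toy model. Neither function below reproduces the real hashing scheme of Nix or Docker; the function names and the input names (file-5.38, gcc, zlib) are only there for illustration:

```shell
#!/bin/bash
# Toy model only: neither function reproduces the real Nix or Docker hashing
# schemes, and both function names are hypothetical.

# Docker-like: the identifier is derived from the bytes of the result.
content_id() { sha256sum "$1" | cut -c 1-12; }

# Nix-like: the identifier is derived from a description of the build inputs.
input_id() { printf '%s\n' "$@" | sha256sum | cut -c 1-12; }

a="$(mktemp)"
b="$(mktemp)"
printf 'same bytes\n' > "$a"
printf 'same bytes\n' > "$b"

# Identical contents give identical identifiers, so the object can be shared.
[ "$(content_id "$a")" = "$(content_id "$b")" ] && echo "content ids match"

# Changing any declared input gives a different identifier.
[ "$(input_id file-5.38 gcc zlib)" != "$(input_id file-5.39 gcc zlib)" ] && echo "input ids differ"

rm -f "$a" "$b"
```

In both models, two objects with the same identifier can be stored once and reused, which is the property that Nix store paths and Docker layers both rely on.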

In practice, reuse with Docker is not always optimal -- for frequently used services, such as Nginx and Apache HTTP server, it is not a common practice to manually derive these images from a Linux distribution base image.

Instead, most Docker users will obtain specialized Nginx and Apache HTTP images. The official Docker Nginx images are constructed from Debian Buster and Alpine Linux, whereas the official Apache HTTP images only support Alpine Linux. Sharing common dependencies between these two images will only be possible if we install the Alpine Linux-based images.

In practice, it happens quite frequently that people run images constructed from all kinds of different base images, making it very difficult to share common dependencies.

Another impractical aspect of Nix is that it works conveniently for software compiled from source code, but packaging and deploying pre-built binaries is typically a challenge -- ELF binaries typically do not work out of the box and need to be patched, or deployed to an FHS user environment in which dependencies can be found in their "usual" locations (e.g. /bin, /lib etc.).

Related work

In this blog post, I have restricted my analysis to Nix and Docker. Both tools are useful on their own, but they are also the foundations of entire solution eco-systems. I did not elaborate much about solutions in these extended eco-systems.

For example, Nix does not do any process/service management, but there are Nix-related projects that can address this concern. Most notably, NixOS, a Linux distribution fully managed by Nix, uses systemd to manage services.

For Nix users on macOS, there is a project called nix-darwin that integrates with launchd, which is the default service manager on macOS.

There also used to be an interesting cross-over project between Nix and Docker (called nix-docker) combining Nix's package management capabilities with Docker's isolation capabilities and supervisord's ability to manage multiple services in a container -- it takes a configuration file (that looks similar to a NixOS configuration) defining a set of services, fully generates a supervisord configuration (with all required services and dependencies) and deploys them to a container. Unfortunately, the project is no longer maintained.

Nixery is a Docker-compatible container registry that is capable of transparently building and serving container images using Nix.

Docker is also an interesting foundation for an entire eco-system of solutions. The most notable example is Kubernetes, a container-orchestrating system that works with a variety of container tools including Docker.

docker-compose makes it possible to manage collections of Docker containers and dependencies between containers.

There are also many solutions available to make building development projects with Docker (and other container technologies) more convenient than my file package build example.

Gitlab CI, for example, provides first-class Docker integration.

Tekton is a Kubernetes-based framework that can be used to build CI/CD systems.

There are also quite a few Nix cross-over projects that integrate with the extended containers eco-system, such as Kubernetes and

docker-compose. For example,

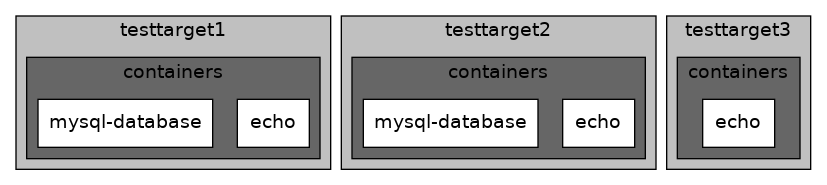

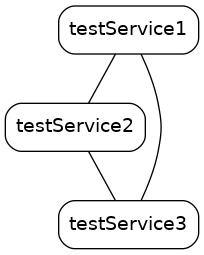

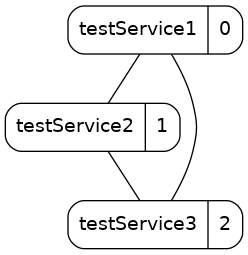

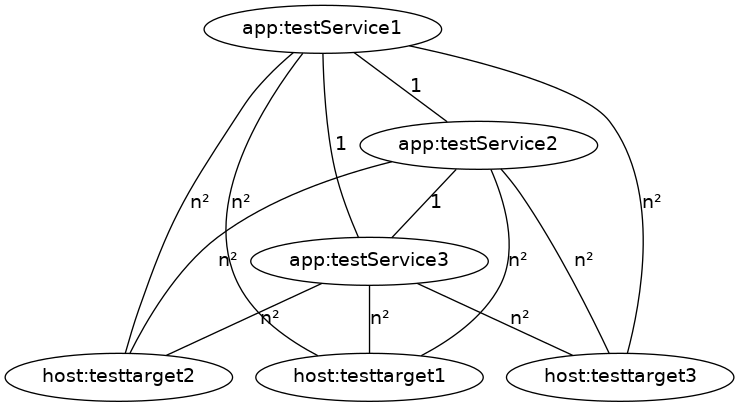

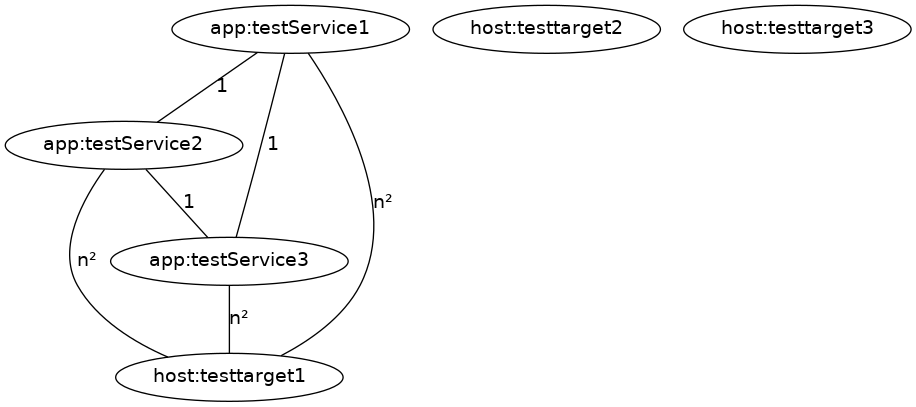

arion can generate

docker-compose configuration files with specialized containers from NixOS modules.

KuberNix can be used to bootstrap a Kubernetes cluster with the Nix package manager, and

Kubenix can be used to build Kubernetes resources with Nix.

As explained in my comparisons, package management is not something that Docker supports as a first-class feature, but Docker has been an inspiration for package management solutions as well.

Most notably, several years ago I did a comparison between Nix and Ubuntu's Snappy package manager. The latter deploys every package (and all its required dependencies) as a container.

In this comparison blog post, I raised a number of concerns about reuse. Snappy does not have any means to share common dependencies between packages, and as a result, Snaps can consume quite a lot of disk space and memory.

Flatpak can be considered an alternative and more open solution to Snappy.

I still do not understand why these Docker-inspired package management solutions have not used Nix (e.g. storing packages in isolated folders) or Docker (e.g. using layers) as an inspiration to optimize reuse and simplify the construction of packages.

Future work

In the next blog post, I will elaborate more on integrating the Nix package manager with tools that can address the process/service management concern.